What impacts will artificial intelligence and ethics have on health provision and education?

Physician competence is an evolving construct. It changes as the world changes: as medical science evolves, as technology and artificial intelligence evolve, as community needs and values evolve, and as individual patient knowledge increases.

The central question for the Australian Medical Council (AMC) on artificial intelligence and ethics concerns the challenges and opportunities that AI presents for medicine and the broader health community:

‘What is the impact of AI on the health workforce of the future? What collective action do we need to take now to ensure that AI models of care strike a balance between delivering the benefits of efficiency, cost reduction and accessibility of healthcare in health settings in Australia, whilst also ensuring that ethical considerations, such as the provision of humanistic care, the goal of increased equity and unbiased treatment of the health community, and the privacy of health information, are not compromised?’

AI and Ethics is a cornerstone of the AMC’s 2018-2028 Strategic Plan. A key outcome of the AMC’s work in this area will be to explore further, with collaborators, the impact of future technologies on health provision, specifically on medical education across the continuum and on Indigenous communities. The AMC will seek to develop ethical standards to guide the appropriate use of technologies in medicine, and to adopt broader support strategies that help health professionals use AI effectively across the medical professions.

On the ethics side, Beauchamp and Childress articulated four principles in ‘Principles of Biomedical Ethics’ (1979):

- Autonomy, or respect for the will of the individual

- Beneficence, or the need to maximise beneficial outcomes

- Non-maleficence, or the ‘first do no harm’ principle commonly associated with the Hippocratic Oath, and

- Justice, or the need to consider equity and the distribution of resources, which has become a major health priority in Australia and New Zealand.

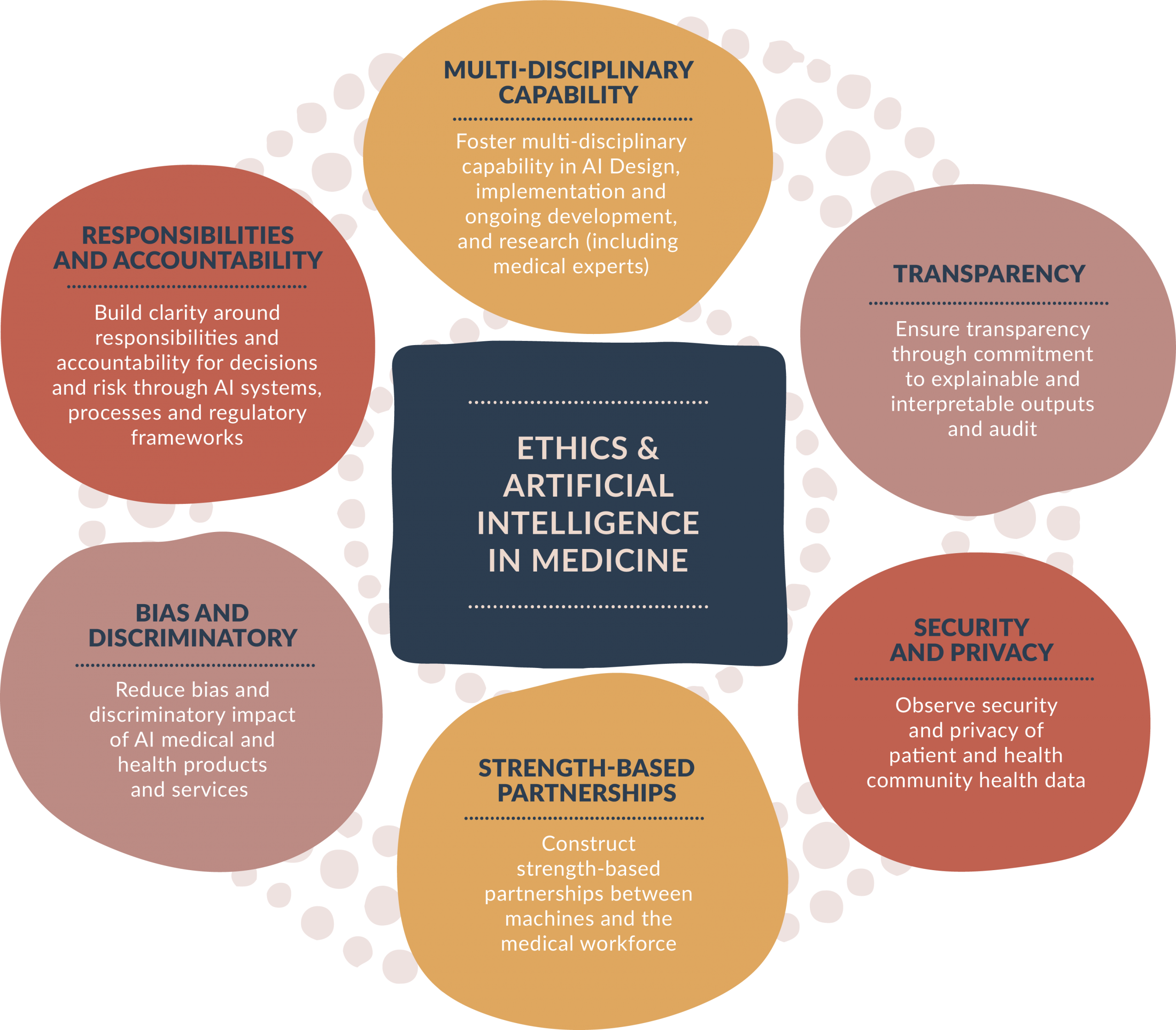

The AMC has incorporated these principles into a framework to build multi-level capability across the sector when engaging with ethics in AI.

Diagram: Framework to build multi-level, cross-sectoral capability when engaging with ethics in AI

In 2019, the AMC conducted several workshops to explore a range of ethical scenarios that affect the medical profession. The AMC is working with partners to consider the possibilities that AI offers for addressing health service delivery challenges, such as improving access to care and easing workforce shortages through remote delivery of care, decision support at the point of care, and opportunities for task delegation.

The AMC will continue to work collaboratively with its partners to ensure that the Australian and New Zealand workforce is well placed to deal with the challenges and opportunities of AI and Ethics in the future.

One of the scenarios explored in the workshops is provided below. What are your responses to the questions? Do they align with the results from your colleagues?

Scenario:

Ninety-one-year-old Bill Horta has a pain in his back. He puts it down to rheumatism and tries to ignore it. He has put up with the pain for a few years and has not told his GP, as he already takes over 30 pills for other health conditions he has raised with her over the last two decades of care.

His GP, Dr Mary Gospel, notices that Bill is struggling to get out of his chair after the consultation and hears him call out in pain when he gets to his feet. On examining his back, Dr Gospel notices a large growth on his spine and immediately writes a referral for a PET* scan at the local hospital.

Twenty-four hours after the scan, Bill receives an SMS asking him to return to the hospital to get the results of his test. He is surprised to get the results so quickly: 10 years earlier, when he was diagnosed with Parkinson’s disease, he had waited two weeks for the diagnosis.

On entering the consultation room in the oncology department, he is stunned when a robot rolls in and says, “Mr Bill Horta, the results of your test are positive. You have a tumour consistent with spinal cancer and metastatic spinal tumours in the prostate and kidneys. Life expectancy is five months.” Bill remains in the room in silence for a few moments and then gets slowly to his feet, muttering, “I guess that is the brave new world.”

* PET scan – Positron Emission Tomography

Questions

1. Is it appropriate for robots to deliver bad news?

- Yes

- No

2. Are there other functions that are more appropriate for the use of AI? Why?

- Pattern Recognition

- Diagnosis

- Other

3. Are there some groups it would be more appropriate for:

- Young people familiar with technology

- People in remote areas

- Other

- No

Page Created 29 Oct 2019 | Page Updated 27 Nov 2019